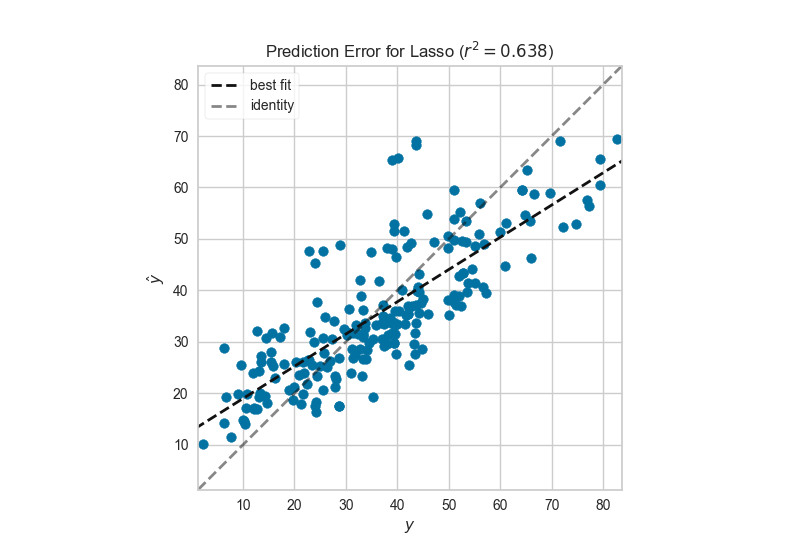

Prediction Error Plot

A prediction error plot shows the actual targets from the dataset against the predicted values generated by our model. This allows us to see how much variance is in the model. Data scientists can diagnose regression models using this plot by comparing against the 45 degree line, where the prediction exactly matches the actual value.
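As a rough illustration of how the plot is read, the sketch below labels each point by its position relative to the 45 degree line. It assumes the actual value is on the x-axis and the prediction on the y-axis, so points above the line are over-predictions. The function name `side_of_identity` and the sample numbers are invented for this example and are not part of Yellowbrick.

```python
def side_of_identity(y_true, y_pred, tol=1e-9):
    """Label each prediction relative to the identity line y_pred == y_true."""
    labels = []
    for actual, predicted in zip(y_true, y_pred):
        error = predicted - actual
        if abs(error) <= tol:
            labels.append("exact")   # the point sits on the 45 degree line
        elif error > 0:
            labels.append("over")    # the point sits above the line
        else:
            labels.append("under")   # the point sits below the line
    return labels


# Made-up values, for illustration only
y_true = [10.0, 20.0, 30.0, 40.0]
y_pred = [12.0, 20.0, 27.0, 41.0]

labels = side_of_identity(y_true, y_pred)
print(labels)
```

A cluster of "over" labels at one end of the target range and "under" labels at the other is the kind of pattern the plot makes visible at a glance.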

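# Imports needed by this example

from sklearn.linear_model import Lasso

from sklearn.model_selection import train_test_split

from yellowbrick.regressor import PredictionError

# Load the data (load_data is a helper that is not defined in this section)

df = load_data('concrete')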

feature_names = ['cement', 'slag', 'ash', 'water', 'splast', 'coarse', 'fine', 'age']

target_name = 'strength'

# Get the X and y data from the DataFrame

X = df[feature_names].values

y = df[target_name].values

# Create the train and test data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Instantiate the linear model and visualizer

lasso = Lasso()

visualizer = PredictionError(lasso)

visualizer.fit(X_train, y_train) # Fit the training data to the visualizer

visualizer.score(X_test, y_test) # Evaluate the model on the test data

g = visualizer.poof() # Draw/show/poof the data

API Reference

Regressor visualizers that score residuals: prediction vs. actual data.

class yellowbrick.regressor.residuals.PredictionError(model, ax=None, shared_limits=True, bestfit=True, identity=True, **kwargs)

Bases: yellowbrick.regressor.base.RegressionScoreVisualizer

The prediction error visualizer plots the actual targets from the dataset against the predicted values generated by our model(s). This visualizer is used to detect noise or heteroscedasticity along a range of the target domain.
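To make "heteroscedasticity along a range of the target domain" concrete, here is a minimal sketch that compares the spread of the prediction errors in the lower and upper halves of the target range. It is only a crude numeric stand-in for what the plot shows visually. The helper `residual_spread_by_half` and the data are hypothetical and not part of Yellowbrick.

```python
from statistics import pstdev


def residual_spread_by_half(y_true, y_pred):
    """Return the residual standard deviation for the lower and upper half of the targets."""
    pairs = sorted(zip(y_true, y_pred))          # order the points by actual target value
    mid = len(pairs) // 2
    low = [pred - actual for actual, pred in pairs[:mid]]
    high = [pred - actual for actual, pred in pairs[mid:]]
    return pstdev(low), pstdev(high)


# Made-up values: errors are small for low targets and large for high targets
y_true = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y_pred = [1.1, 1.9, 3.1, 3.9, 6.0, 5.0, 8.5, 6.5]

low_spread, high_spread = residual_spread_by_half(y_true, y_pred)
print(low_spread, high_spread)
```

A much larger spread in one half than in the other suggests that the error variance depends on the target value, which appears in the plot as a fan-shaped point cloud around the identity line.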

Parameters: - model : a Scikit-Learn regressor

Should be an instance of a regressor, otherwise it will raise a YellowbrickTypeError exception on instantiation.

- ax : matplotlib Axes, default: None

The axes to plot the figure on. If None is passed in, the current axes will be used (or generated if required).

- shared_limits : bool, default: True

If shared_limits is True, the X and Y axes share identical limits, creating a square graphic with a true 45 degree line. In this form, it is easier to diagnose under- or over-prediction, though the figure will become more sparse. To localize points, set shared_limits to False, but note that this distorts the figure, which should be accounted for during analysis.

- bestfit : bool, default: True

Draw a linear best fit line to estimate the correlation between the predicted and measured value of the target variable. The color of the bestfit line is determined by the line_color argument.

- identity : bool, default: True

Draw the 45 degree identity line, y=x, in order to better show the relationship or pattern of the residuals, e.g. to estimate if the model is over- or under-estimating the given values. The color of the identity line is a muted version of the line_color argument.

- point_color : color

Defines the color of the error points; can be any matplotlib color.

- line_color : color

Defines the color of the best fit line; can be any matplotlib color.

- kwargs : dict

Keyword arguments that are passed to the base class and may influence the visualization as defined in other Visualizers.
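The ideas behind shared_limits and bestfit can be sketched in plain Python. The code below is an illustration under stated assumptions, not Yellowbrick's implementation: `shared_limits` here is a hypothetical helper that returns one range covering both axes, and `best_fit_line` is an ordinary least-squares fit of the predictions on the actual values.

```python
def shared_limits(y_true, y_pred):
    """Return one (low, high) range that covers both axes, giving a square plot."""
    values = list(y_true) + list(y_pred)
    return min(values), max(values)


def best_fit_line(x, y):
    """Return the least-squares slope and intercept of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up values: predictions are compressed toward the middle of the range
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 3.0]

limits = shared_limits(y_true, y_pred)
slope, intercept = best_fit_line(y_true, y_pred)
print(limits, slope, intercept)
```

With identical limits on both axes, the identity line has a visual slope of exactly 1. A best fit slope below 1, as in this made-up data, means low targets are over-predicted and high targets are under-predicted.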

Notes

PredictionError is a ScoreVisualizer, meaning that it wraps a model and its primary entry point is the score() method.
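The wrapping pattern described in this note can be sketched with two toy classes. Both `MeanModel` and `ToyScoreVisualizer` are hypothetical stand-ins written for this example. They only mirror the fit/score/draw flow and do not reproduce Yellowbrick's code or draw anything.

```python
class MeanModel:
    """Toy regressor that always predicts the training mean (illustration only)."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]

    def score(self, X, y):
        # Negative mean absolute error, so that a higher score is better
        preds = self.predict(X)
        return -sum(abs(p - a) for p, a in zip(preds, y)) / len(y)


class ToyScoreVisualizer:
    """Hypothetical stand-in for a score visualizer that wraps a model."""

    def __init__(self, model):
        self.model = model
        self.points = []

    def fit(self, X, y):
        self.model.fit(X, y)              # fitting is delegated to the wrapped model
        return self

    def score(self, X, y):
        y_pred = self.model.predict(X)    # predict on the test data
        self.draw(y, y_pred)              # hand actual and predicted values to draw()
        return self.model.score(X, y)     # return the wrapped model's own score

    def draw(self, y, y_pred):
        # A real visualizer would plot these pairs; here they are only stored
        self.points = list(zip(y, y_pred))


viz = ToyScoreVisualizer(MeanModel())
viz.fit([[0], [1], [2]], [1.0, 2.0, 3.0])
result = viz.score([[3], [4]], [2.0, 4.0])
print(viz.points, result)
```

The point of the pattern is that calling score() does two jobs: it evaluates the wrapped model and it produces the data for the plot.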

Examples

>>> from yellowbrick.regressor import PredictionError

>>> from sklearn.linear_model import Lasso

>>> model = PredictionError(Lasso())

>>> model.fit(X_train, y_train)

>>> model.score(X_test, y_test)

>>> model.poof()

draw(y, y_pred)

Parameters: - y : ndarray or Series of length n

An array or series of target or class values

- y_pred : ndarray or Series of length n

An array or series of predicted target values

Returns: - ax : the axis with the plotted figure

finalize(**kwargs)

Finalize executes any subclass-specific axes finalization steps. The user calls poof and poof calls finalize.

Parameters: - kwargs: generic keyword arguments.

score(X, y=None, **kwargs)

The score function is the hook for visual interaction. Pass in test data and the visualizer will create predictions on the data and evaluate them with respect to the test values. The evaluation will then be passed to draw() and the result of the estimator score will be returned.

Parameters: - X : array-like

X (also X_test) holds the independent variables (features) of the test set to predict from

- y : array-like

y (also y_test) holds the actual dependent (target) values to score against

Returns: - score : float
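For scikit-learn regressors such as Lasso, the estimator's score method returns the coefficient of determination, R^2, so that is presumably the value returned here when such a model is wrapped. The sketch below computes R^2 by hand to show what the number means. The function name `r_squared` and the data are chosen for this example only.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((a - p) ** 2 for a, p in zip(y_true, y_pred))
    ss_tot = sum((a - mean_y) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up values, for illustration only
y_true = [1.0, 2.0, 3.0, 4.0]

perfect = r_squared(y_true, y_true)                  # every point on the identity line
baseline = r_squared(y_true, [2.5, 2.5, 2.5, 2.5])   # always predicting the mean
print(perfect, baseline)
```

A model whose points all lie on the identity line scores 1.0, and a model that always predicts the mean of the targets scores 0.0. Scores can be negative when the model does worse than that baseline.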